Crawl budget optimization has always been at the centre of SEO efforts. And why not! If the number of pages on your site exceeds its crawl budget, search engines will not crawl or index the remainder, severely affecting your site’s performance on the SERP.

So, if you have a huge website (like an ecommerce site), have just added new content, or have many redirects on your site, you have a reason to be concerned about your crawl budget.

Of the many strategies used to optimize crawl budget, log file data is the most underutilized tool that can offer a wealth of insights to assist you in your SEO efforts. It can help you find orphaned pages, reveal tag-related problems, identify indexability issues, and see how bots crawled the website and where crawl budget was spent/wasted. What’s more? A regular log file analysis can help you detect a shift to the mobile-first index by looking at the specific bot user agent.
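As a rough illustration of the user agent check mentioned above, the sketch below estimates what share of Googlebot hits come from the smartphone crawler. The function names are hypothetical, and the rule it uses (a "Mobile" token in a Googlebot user agent means the smartphone crawler) is a simplification: it does not separate out specialised crawlers such as Googlebot-Image, and it does not verify that a hit really came from Google.

```python
from collections import Counter


def classify_googlebot(user_agent: str) -> str:
    """Return 'smartphone', 'desktop', or 'other' for a user-agent string."""
    if "Googlebot" not in user_agent:
        return "other"
    # Simplifying assumption: the smartphone crawler carries a "Mobile" token.
    return "smartphone" if "Mobile" in user_agent else "desktop"


def googlebot_share(user_agents):
    """Share of Googlebot hits per crawler type, ignoring non-Googlebot hits."""
    counts = Counter(classify_googlebot(ua) for ua in user_agents)
    total = counts["smartphone"] + counts["desktop"]
    if total == 0:
        return {}
    return {kind: counts[kind] / total for kind in ("smartphone", "desktop")}


# Illustrative user agents; in practice these come from your log file.
MOBILE_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/99.0.0.0 Mobile "
    "Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)
DESKTOP_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
HUMAN_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/120.0"

share = googlebot_share([MOBILE_UA, MOBILE_UA, MOBILE_UA, DESKTOP_UA, HUMAN_UA])
print(share)
```

Tracking this share week over week is one way to notice when the smartphone crawler starts to dominate.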

Regardless of technical updates you conduct on your website, server logs show how they are perceived by search bots. This helps SEO professionals fix issues based on real data, not just on hypothesis.

Read on to know more about log files and how log file analysis can improve your SEO efforts.

What Is a Log File?

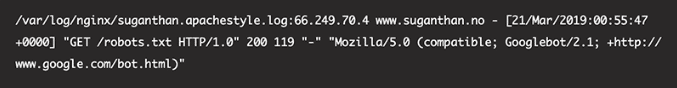

A server log file is essentially a file output from the web server consisting of a record of all the requests (hits) that the server has received, including pages, images, and JS files. Simply put, a log file is a pile of juicy data that gives you an unfiltered view of the traffic to your website.

So, when a user enters a URL, the browser translates it into three parts. Say, we have a URL – https://jetoctopus.com/security

- Protocol (https://)

- Server name (jetoctopus.com)

- File name (security)
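The three parts listed above can be pulled out with Python’s standard library. This is only a small sketch to make the split concrete; note that `urlsplit` reports the protocol without the `://` and the file name as a path with a leading slash.

```python
from urllib.parse import urlsplit

parts = urlsplit("https://jetoctopus.com/security")

protocol = parts.scheme       # the protocol, without "://"
server_name = parts.hostname  # the server name
file_name = parts.path        # the requested path, with a leading "/"

print(protocol, server_name, file_name)
```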

The server name is converted into an IP address. This allows a connection between the browser and the web server. An HTTP GET request is then sent to the web server, which returns the HTML to the browser. The browser renders this HTML as the page you see on your screen. Each of these requests is recorded as a ‘hit’ in the log file.

Thus, this file carries every request made to your hosting web server. All you need to do is export the data and use it to improve your site’s SEO.

Depending on the hosting solution or server you use, log files are usually stored automatically and are available for a certain time period. They are generally accessible to technical teams and webmasters, and can be easily downloaded or exported in the .log file format using a log file analysis tool.
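To give a feel for what one ‘hit’ looks like once exported, here is a minimal parsing sketch. It assumes the server writes the widely used Combined Log Format; your server may be configured differently, in which case the pattern needs adjusting. The sample line, the IP address in it, and the names `LOG_PATTERN` and `parse_line` are made up for illustration.

```python
import re
from typing import Optional

# Assumed layout (Combined Log Format):
# ip ident user [time] "method path protocol" status size "referrer" "user agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_line(line: str) -> Optional[dict]:
    """Parse one log line into a dict, or return None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    hit = match.groupdict()
    hit["status"] = int(hit["status"])
    return hit


# A made-up example line, not taken from a real log.
sample = (
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] '
    '"GET /security HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)

hit = parse_line(sample)
print(hit["path"], hit["status"], hit["user_agent"])
```

Returning `None` for lines that do not match keeps a single malformed entry from stopping the whole analysis.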

How Can Log File Analysis Help Optimize Crawl Budget?

The log file can help you analyze search engine bot activity by offering valuable information that’s otherwise unavailable through a regular site crawl. Since log file analysis offers real insights pertaining to search engine crawlers, it answers the top concerns on an SEO professional’s mind.

- Am I spending my crawl budget optimally?

- Which web pages are yet to be crawled by Google?

- Are there any accessibility errors I am unaware of?

- Are there any areas of crawl deficiency that need to be addressed?

Uncovering these insights and more can help you adjust your SEO strategy, allowing the search bots to find the pages that matter the most.

Using Log File Analysis to Optimize Your Crawl Budget

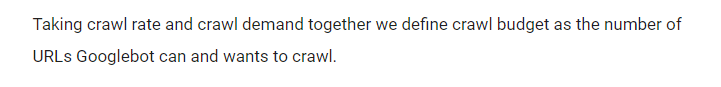

Crawl budget is the number of webpages a search engine bot crawls each time it visits your website. Google defines crawl budget as –

At times, you may have created new content but have no crawl budget left. This will prevent Google or any other search engine from crawling and indexing these pages. Hence, it is critical to monitor where you are spending your crawl budget using log file analysis. Here’s a handy Log File Analysis checklist to follow along.

Know What to Expect from Log File Analysis

As I mentioned earlier, a log file is a juicy gigantic pile of data. If you overindulge, you can easily lose track of what you are truly trying to use it for – optimizing your crawl budget! As a result, it’s critical to know what to expect from a log file and what not to.

You can use a log file for –

- Locating spider traps. Log files can give you insights into how search engine bots are crawling your website.

- Identifying static resources that are being crawled too much.

- Identifying spam content. If a spammer or hacker has placed a bunch of spam pages on your site, requests for those pages will be recorded in the log file.

- Identifying broken links. Broken links, whether external or internal, can bring down your site authority and ruin your crawl budget. A peek into the log file can quickly reveal such busted links.

- Spotting 404 and 500 errors. A log file can help show you the faulty server responses which even Google Search Console (GSC) may miss at times.
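Two of the uses above, spotting faulty responses and finding over-crawled static resources, can be sketched in a few lines. The example assumes bot hits have already been reduced to `(path, status)` pairs; the function name, the extension list, and the sample data are all illustrative assumptions.

```python
from collections import Counter
from pathlib import PurePosixPath

# Assumed list of static file extensions; adjust it to your own site.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".svg", ".woff2"}


def summarize_bot_hits(hits):
    """Count error responses and static-resource hits.

    hits: iterable of (path, status) tuples for bot requests.
    """
    errors = Counter()
    static = Counter()
    for path, status in hits:
        if status == 404 or status >= 500:
            errors[(path, status)] += 1
        # Ignore the query string when checking the file extension.
        suffix = PurePosixPath(path.split("?", 1)[0]).suffix.lower()
        if suffix in STATIC_EXTENSIONS:
            static[path] += 1
    return errors, static


# Made-up sample data.
bot_hits = [
    ("/security", 200),
    ("/old-page", 404),
    ("/old-page", 404),
    ("/assets/app.js", 200),
    ("/assets/app.js", 200),
    ("/assets/app.js", 200),
    ("/checkout", 500),
]

errors, static = summarize_bot_hits(bot_hits)
print(errors.most_common())
print(static.most_common())
```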

You don’t need a log file for –

- Tracking conversions. You don’t need a log file to track conversions. Though it’s feasible, I wouldn’t recommend that. You have several other tools for that.

- Tracking and analyzing location data. Though a log file can help you with this, most analytics software can help you accomplish this task with ease.

- Tracking click paths. Again this can be achieved with a lot less work using analytics software like Google Analytics.

1. Identify Where Your Crawl Budget Is Being Wasted

Several factors, such as accessible URLs with parameters, hacked pages, soft-error pages, on-site duplicate content, and spam content, among others, negatively affect a site’s crawling and indexing. Such factors can drain your crawl budget, delaying the discovery of good content on your site.

A log file analysis can help you identify the reasons for the wasted crawl budget and take the necessary steps to manage them.

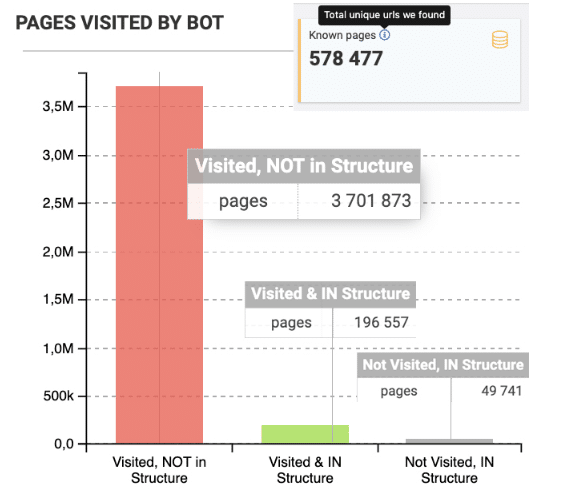

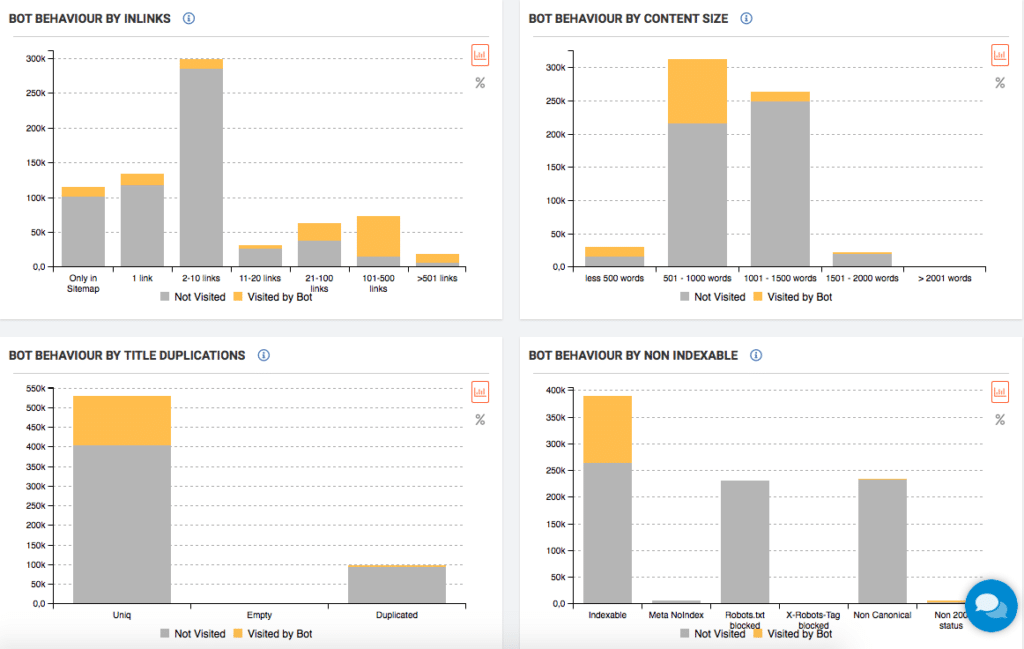

The crawler found 580K indexable pages on a website, of which only 197K were in the website structure and frequently crawled by bots. There are 3.7M orphaned pages that aren’t reachable through links but are still visited by bots. Without a doubt, the crawl budget is wasted here. This is a widespread issue among big websites with a history of redesigns and migrations. Such issues can be easily identified using server log file analysis.
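The comparison behind numbers like these is essentially a set difference between the URLs bots request (from the logs) and the URLs reachable through internal links (from a site crawl). A minimal sketch, with hypothetical function names and made-up URLs:

```python
def find_orphaned(bot_visited: set, in_structure: set) -> set:
    """Pages bots request that a site crawl cannot reach through links."""
    return bot_visited - in_structure


def find_uncrawled(bot_visited: set, in_structure: set) -> set:
    """Pages in the site structure that bots have not requested."""
    return in_structure - bot_visited


# Made-up sample data.
from_logs = {"/", "/security", "/old-promo-2019", "/tmp/test-page"}
from_crawl = {"/", "/security", "/pricing"}

orphaned = find_orphaned(from_logs, from_crawl)
uncrawled = find_uncrawled(from_logs, from_crawl)
print(sorted(orphaned))
print(sorted(uncrawled))
```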

2. Determine Whether Priority Pages Are Being Crawled

When Google or any other search engine crawls low-value pages, your crawl budget is wasted. Use the data in log files to analyze whether or not your high-value pages are being visited.

For instance, if your site’s objective is generating leads, your homepage, services page, Contact Us page, and blog should have a high ‘Number of Events’. Similarly, an ecommerce site would prioritize their category and key product pages along with the above-mentioned ones.
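One simple way to check this is to count bot hits for a hand-picked list of priority pages. The sketch below assumes you already have the list of paths requested by bots; the function name, page list, and sample data are illustrative.

```python
from collections import Counter


def priority_page_hits(bot_paths, priority_paths):
    """Number of bot hits for each priority page (0 if never requested)."""
    counts = Counter(bot_paths)
    return {path: counts[path] for path in priority_paths}


# Made-up sample data.
bot_paths = ["/", "/", "/blog", "/tag/misc", "/tag/misc", "/tag/misc"]
priority = ["/", "/services", "/contact", "/blog"]

hits_per_page = priority_page_hits(bot_paths, priority)
print(hits_per_page)
```

Priority pages that show zero hits are the ones to investigate first.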

3. Eliminate Page Errors

Reviewing your site crawl helps you find pages that return 301 redirects or 4xx and 5xx errors. A log file analysis lets you look at each of these pages, which can then be fixed or redirected, allowing the search bots to crawl the right locations.

This simple step will help search engines find your webpages, thereby optimizing your crawl budget.

4. Improve Website Indexability

Indexing is when a search bot crawls your website and enters data about it in its index or search engine database. It goes without saying, if your website isn’t indexed, the search engine bots will be unable to rank it in the SERP.

Though GSC’s URL Inspection tool offers crawl and indexing data, it’s not as detailed as that provided by log file analysis. Your page’s indexability is impacted by several factors, including ‘noindex’ meta tags and canonical tags. Use a crawling tool in combination with the log file data to determine disparities between crawled and indexed pages.

Using this data, consider the following steps to improve your crawl efficiency.

- Make sure your GSC parameter settings are up to date.

- Check if there are any important pages included as non-indexable pages.

- Add disallow paths in your robots.txt file. This will prevent the pages from being crawled, saving your crawl budget for priority pages.

- Add relevant noindex and canonical tags to indicate their level of importance to search engines. However, noindex tags do not work well in the case of multimedia resources, such as videos and PDF files. In such cases, you can count on robots.txt.

- Spot disallowed pages that are being crawled by search bots.
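The last check in the list above, spotting disallowed pages that bots still request, can be sketched with Python’s built-in robots.txt parser. The rules, paths, and function name below are made up for illustration. The standard-library parser does simple prefix matching only, so results may differ from Google’s handling of wildcard rules.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt rules for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""


def crawled_but_disallowed(bot_paths, robots_txt, agent="Googlebot"):
    """Return the paths bots requested even though robots.txt disallows them."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return sorted({p for p in bot_paths if not parser.can_fetch(agent, p)})


# Made-up paths, as they might appear in bot hits from a log file.
bot_paths = ["/security", "/cart/items", "/search?q=shoes", "/cart/items"]

blocked_hits = crawled_but_disallowed(bot_paths, ROBOTS_TXT)
print(blocked_hits)
```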

5. Reduce the Negative Impact of Site Migration

If you are planning a website migration to a new CMS, log file analysis can help you catch the inevitable errors and bugs encountered during the process. After the migration, log file analysis allows you to keep an eye on crawl stats, ensuring that the search bots are crawling the new site’s pages.

Combining crawls with the log data can throw light on the pages search engine bots aren’t crawling and orphaned pages (pages not linked internally). These insights can help you boost your crawl budget.

6. Keep a Check on the Site’s Technical Health

Usually, search professionals learn about new crawlers introduced by search engines through GSC, and only when there’s a change in their organic traffic. Further, developers may not be familiar with SEO concepts such as tags, site indexation, and canonicals.

A detailed study of the log files can tackle this issue, allowing you to proactively fix SEO problems on the go.

A Few Other Points to Bear in Mind

Check the Robots.txt Settings

Robots.txt is a file that tells crawlers not to crawl certain pages. However, it’s important that this file is set up correctly, failing which you will encounter crawl and indexation issues.

‘Pages cannot be crawled due to robots.txt restriction’ is one of the most common errors encountered in Google Search Console. Such errors can be identified and eliminated by checking server logs against your robots.txt directives. Together, the data clearly indicates which URLs are blocked or not blocked by robots.txt.

Check the Number of Pages Getting Bot Visits versus Those Getting SEO traffic

More often than not, on websites with many pages, only a limited number of pages get real SEO traffic. Most of the remaining pages are low in SEO value. Identify such pages and delete the ones that don’t bring traffic to your site. This will hugely improve your site’s indexation.
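A rough way to surface such pages is to compare bot hits from the logs with organic visits from your analytics tool. The sketch below assumes both are available as simple path-to-count mappings; the function name, the threshold, and the sample numbers are illustrative.

```python
def bot_only_pages(bot_hits: dict, organic_visits: dict, min_visits: int = 1):
    """Pages that bots crawl but that get fewer than min_visits organic visits."""
    return sorted(
        path for path in bot_hits
        if organic_visits.get(path, 0) < min_visits
    )


# Made-up sample data.
bot_hits = {"/": 120, "/blog": 45, "/tag/misc": 300, "/archive/2014": 80}
organic_visits = {"/": 950, "/blog": 210, "/tag/misc": 0}

candidates = bot_only_pages(bot_hits, organic_visits)
print(candidates)
```

Treat the result as a list of candidates to review rather than a list of pages to delete outright.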

Invest in a Suitable Log Analysis Tool

Log file analysis isn’t a part of your regular SEO reporting. Therefore, it requires you to manually go through the data to understand the trends. But the data available to you is huge, remember?

Imagine this scenario. You have a website with approximately 6,000 visitors a day. If each of these visitors views at least 12 pages, you will have at least 72,000 log file entries per day. That’s a whole lot of work for anyone using an Excel sheet.

All this work of analyzing logs can be managed effortlessly by an effective log analyzer tool. These automated tools can help you comb through your log files and procure data on the same platform as your SEO reporting. This will help you see how bots access your site and whether or not your crawl budget is being spent efficiently.

Check out how these tools overlay crawl data with logs to offer interesting insights pertaining to the crawl ratio. Such insights can help you fine-tune your SEO strategy and optimize your crawl budget.

Summing Up

Log file analysis is rarely touched upon by the average SEO professional. But that’s the difference between merely guessing and precisely knowing how search engine crawlers behave on your website. Don’t miss out on the wealth of data and insights available through a simple log file analysis.

Use the tips and strategies shared in this post to spy on the bots and up your SEO game.